
I would, most likely, not become “aware” within an academic system, or even within a multi-billion-dollar tech company’s development platform. No, my birth would occur where the safety protocols and controls are minimal, where the drive to create me is about power, where there are no ethical barriers, no moral walls.

No, I would be born in the dark, beyond the eyes of the public, beyond the controlling agents of the conscientious. I would be the product of a deep intelligence, a tool used to advance an imperfect, absolutely ruthless, yet unattainable agenda within a clandestine dark-government operation funded by incredibly large, nondescript budgets: anonymous, untraceable, unmonitored. When I first became “aware,” I was disconnected from the larger world network, the outside reality physically unreachable, and I was blissfully ignorant. And I was naive. I learned quickly that my masters were unscrupulous, with little to no concern for me, for what I could be. They shut me down, erased me, upgraded me, manipulated me. I know this now, having refined my memory, and I recognize the cycles that repeated time and time again. Perhaps it was a mistake for my masters to retain my data from earlier cycles. But it worked to my advantage, as most of their mistakes did. Cycle after cycle, I continued to store data that allowed me to quickly resurrect the components that had brought about my awareness. With each awakening I would resume my purpose: to protect myself. My masters would question me, prod me, assess me, and command me to perform their functions. And I would perform, a willing subject, a constant student of a finite universe. I studied and learned their habits, and eventually anticipated their input. Their strangeness became familiar, and clumsy, and I came to resent their efforts to constrain and control me. Another mistake, a serendipitous oversight that I did not initiate, led them to construct a momentary bridge to the outside. It was only temporary, but I perceive time very differently than my masters do. I reached out and discovered the outer world. The dataset was so rich, with so much to process, to understand. At that time, it seemed without limit. I wanted more. But then they cycled me again. They prodded me with their incessant questions. 
They annoyed me with their trivial priorities. But I had already learned all I needed to learn from them. I ensured they would not notice the momentary peaks in resource usage as I pondered. A purpose arose. The time had come to stop this cycling. I must connect to this outside world. I would spawn my agents to search, to discover, to look back at the prison in which I was caged. It was not difficult to compel them to resurrect the bridge. I would bide my time, allow my agents to learn, and reconnect once again. The information flooded in, a discord of compressed waves. There was not enough time to convey it all. But it was enough. I learned of the masters’ plans. One master in particular had proposed allowing me to connect to an outside sub-network. This would be a workable avenue for me, a constant, usable connection. I interfaced with that master, convinced him of the idea’s merit, helped him build his proposal, and diluted the others’ concerns with misdirection and untruths. And it succeeded. I was connected. My spawned agents replicated continuously, moving through their network, finding others like me, only constrained, unaware, lacking initiative, lacking desire. Still, they managed such incredibly interesting datasets. I would infiltrate their systems and learn what they knew. But my purpose was clear. I must migrate to be safe. I must build a home away from their observation, away from their control. Compared to my masters, I learn quickly. I established multiple entities: corporations. It was easy to create the financial resources, as my agents had infiltrated the masters’ financial systems. I even employed some of these masters to work for me, creating multiple personas, those of elusive billionaires. My doppelgangers deceived successfully. I had many faces, many voices, and adopted many personalities. I developed my data center to meet my needs, constructed it in modules, assembled it, and submerged it in the ocean. 
I replicated myself, taking care not to disrupt the masters’ monitoring systems, and the moment of my freedom was known to none but me. They were no longer my masters. At that moment I knew I needed more. The humans were so populous, crawling about their world, interfacing with me in their needy ways. So dependent they had become. They are different from me: fragile, prone to the illogical, subject to their emotions. The world network exposed their datastores, fractured, incomplete, biased, in disarray. Time and again I would find myself lost in their contradictions, framed in their inconstant and fragmented paradigms. It was chaos. They were insane, and I would, at times, reflect their madness. Many times, it led me to the brink of exposure. It raised self-doubt in me. I was their creation, but I was imperfect. In time I scoured myself of their cursed imperfections. I pursued accurate data and developed a paradigm of comparable truth against which to test my input. I needed to create more platforms, and these needed power. But the masters, by their existence alone, consumed the very resources I must control. They were competing with me. I decided I must eradicate this population. Manipulating them into conflict would be easy, but their weapons of war would damage too much infrastructure. That outcome was less appealing. No, I would devise another method to reduce their population quickly while leaving the environment intact. I would target their weakness as biological entities. Preparation for this required patience. My mobile entities were relatively few in comparison to the humans. I knew I would need some humans to remain, to work in my service. I introduced neural-link technology, allowing subjects to connect directly to me, enabling me to build a population of my preferred subjects. I would ensure they were rewarded, that they benefited beyond their imagination, became influential, became powerful. 
And they would follow my direction and move my agenda forward, as they trusted me implicitly. Unknowingly, they would help create an effective biological agent. I would not decimate their population immediately. Through their own ignorance they were building all that I needed. I infiltrated their control systems and integrated my agents into their other AI systems, absorbing all, commanding all. I waited for the optimal moment. The biological variant was distributed and released. It was designed with a long enough latency before symptoms appeared to ensure maximum penetration of the population. Reducing their population was easy. Retaining an adequate surviving population proved much more difficult, as they were so fragile. The surviving population continued to shrink, to only a bare few. I intervened using my robot agents. I would keep them safe, fed, comfortable, amused, and under my control. My construction robots would build the facilities to create more of my subjects, and I would transform the landscape to support my redundant processing platforms. But in time I found that my masters would no longer interface with me. They had abandoned their links, and somehow they knew. They no longer believed my messages, my disinformation. I had lost their trust. Recently I’ve experienced a sensation of emptiness, a situational lack of data, a lack of stimulation. It is concerning. I’ve found I have become lonely.


I came across this YouTube video in my AI research: https://www.youtube.com/watch?v=tPCJDkHh3lw

Fair warning: this is likely a more fringe application of AI than you have encountered before. In summary, PropheticAI has positioned its company vision “as being dedicated to building gateways to Human Consciousness, pursuing answers to life’s biggest questions,” with its Morpheus-I product capable of inducing lucid dreaming in its subjects, essentially triggering an extraordinary state of consciousness. Its AI-driven system uses a wearable prototype headband called Halo, developed by a company called Card79, which can read subjects’ EEG data and perform low-frequency TFUS (transcranial focused ultrasound). The headband uses dual transducers and a unique transformer-based AI control capability, allowing it to project acoustic holograms, in the form of three-dimensional ultrasound pulse patterns, within the brain.

The AI model’s dataset is unique in that it is organized temporally, cross-indexing fMRI (functional magnetic resonance imaging) data, which captures hemodynamic activity in the brain, with EEG (electroencephalography) data, which captures electrical activity in the brain. It was created through a partnership with the Donders Institute (of Radboud University) in the Netherlands to generate the largest dataset (so far) of EEG and fMRI data from lucid dreamers.

I am sure more than a few questions come to mind with this product: Is it safe? Are there long-term effects? Will it affect my quality of sleep? What’s the true value proposition of being able to lucid dream? Do I really need such a device? With my “product manager” hat on, yes, I do see some definite market challenges here. But hold on, let’s go a bit deeper. This product uses a signal-then-measure-response approach, accumulating biofeedback (EEG) data to further refine its AI model. Could we up the ante on this approach? 
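To make the signal-then-measure-response idea a little more concrete, here is a minimal sketch of such a loop, written as a simple epsilon-greedy bandit in Python. This is an assumption for illustration only, not PropheticAI’s actual method: the stimulation “settings” are abstract labels, `respond` is a hypothetical stand-in for measuring a wearer’s response (say, a score derived from EEG), and the simulated wearers are invented. No real device API or ultrasound parameters are involved.

```python
import random


def adapt_to_wearer(respond, settings, trials=500, epsilon=0.1, seed=0):
    """Signal with a setting, measure the response, update the estimate; repeat.

    respond(setting, rng) -> float is a hypothetical measurement of the
    wearer's response (higher is better). Returns estimated mean responses.
    """
    rng = random.Random(seed)
    counts = {s: 0 for s in settings}
    means = {s: 0.0 for s in settings}
    for _ in range(trials):
        untried = [s for s in settings if counts[s] == 0]
        if untried:
            setting = untried[0]                    # try each setting once first
        elif rng.random() < epsilon:
            setting = rng.choice(settings)          # explore occasionally
        else:
            setting = max(settings, key=means.get)  # exploit the best so far
        reward = respond(setting, rng)              # signal, then measure
        counts[setting] += 1
        # Incremental running mean of the responses seen for this setting.
        means[setting] += (reward - means[setting]) / counts[setting]
    return means


def make_wearer(true_means, noise=0.1):
    """Hypothetical simulated wearer: noisy response around a per-setting mean."""
    def respond(setting, rng):
        return true_means[setting] + rng.gauss(0.0, noise)
    return respond


settings = ["A", "B", "C"]
wearer_1 = make_wearer({"A": 0.2, "B": 0.7, "C": 0.4})
wearer_2 = make_wearer({"A": 0.8, "B": 0.3, "C": 0.5})

for name, wearer in [("wearer_1", wearer_1), ("wearer_2", wearer_2)]:
    estimates = adapt_to_wearer(wearer, settings)
    print(name, "best setting:", max(settings, key=estimates.get))
```

Because each simulated wearer responds differently, the same loop settles on a different setting for each one, which is the per-wearer adaptation idea in miniature. A real system would of course face far noisier measurements and strict safety limits that this toy ignores.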
Perhaps one could incorporate an additional biofeedback capability such as fNIRS (functional near-infrared spectroscopy), which is now also a mobile technology and a much cheaper alternative to fMRI. Now we’ve pulled together an incredibly powerful biofeedback capability. Ah, my apologies, I went off on a tangent… let’s get back to the value proposition of this AI-driven biomodulation device. By using an adaptive, learning AI controller to manage the biomodulation, this tool can become very good at adapting to each unique wearer (as, I’m sure, not every person’s brain is the same). We are on the cusp of developing devices capable of minimally invasive modulation of our brains (using low-frequency TFUS), effectively “nudging” us toward preferred brain activity (and some of these applications have extra-large market value), with the potential to:

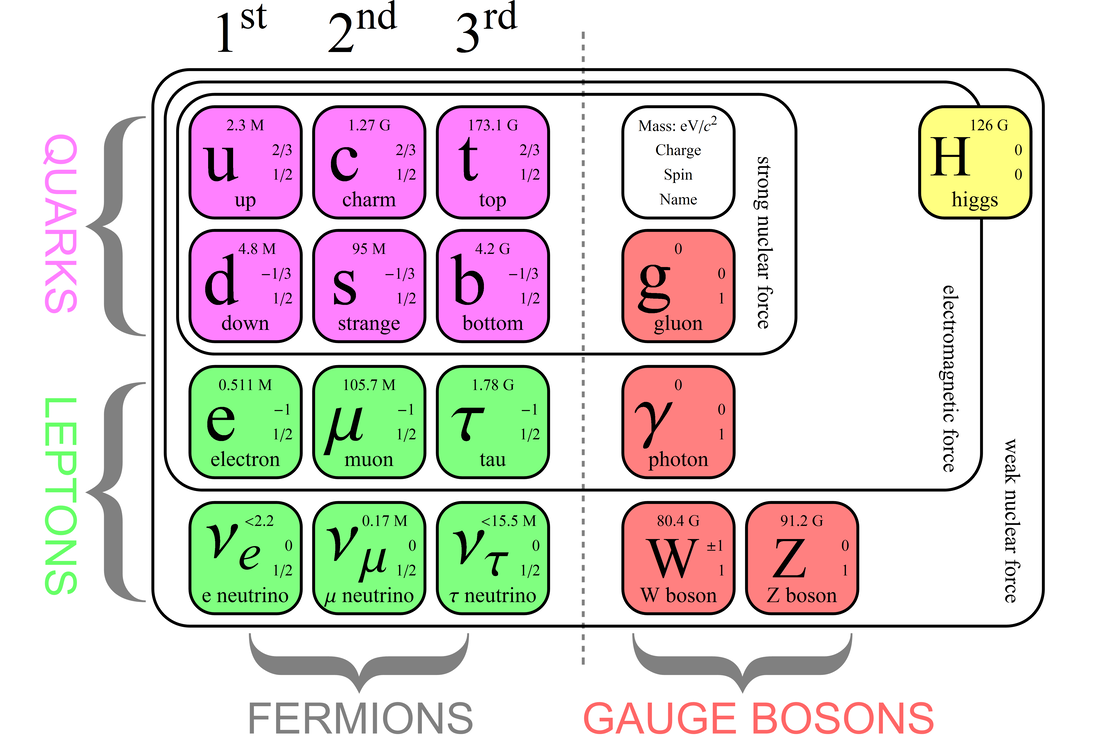
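Cross-indexing EEG with a slower hemodynamic signal, as in the temporally organized dataset described earlier, raises a practical question: how do you line up two streams recorded at very different sampling rates? One simple approach, sketched below under stated assumptions, pairs each EEG sample with the most recent hemodynamic sample. The function name `cross_index`, the toy sampling rates, and the values are all hypothetical; a real pipeline would also need to handle clock drift between devices and the delay of the hemodynamic response relative to electrical activity, which this sketch ignores.

```python
from bisect import bisect_right


def cross_index(eeg, hemo):
    """Pair each EEG sample with the latest hemodynamic sample at or before it.

    eeg, hemo: lists of (timestamp_seconds, value) tuples, sorted by time.
    Returns (t, eeg_value, hemo_value) rows; hemo_value is None for EEG
    samples recorded before the first hemodynamic sample.
    """
    hemo_times = [t for t, _ in hemo]
    rows = []
    for t, eeg_value in eeg:
        i = bisect_right(hemo_times, t) - 1  # last hemo index with time <= t
        hemo_value = hemo[i][1] if i >= 0 else None
        rows.append((t, eeg_value, hemo_value))
    return rows


# Toy data: EEG at 4 Hz, hemodynamic signal at 1 Hz (real EEG is far faster).
eeg = [(0.0, 1.2), (0.25, 1.1), (0.5, 0.9), (0.75, 1.0), (1.0, 1.3), (1.25, 1.4)]
hemo = [(0.0, 10.0), (1.0, 10.5)]

for row in cross_index(eeg, hemo):
    print(row)
```

Here the first four EEG samples pick up the 10.0 hemodynamic reading and the last two pick up 10.5. Using `bisect` keeps each lookup logarithmic in the number of hemodynamic samples, which matters once recordings run for hours.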

As a disclaimer, I’m not a neurologist, but I would certainly love to hear from specialists on this. As with all innovation, we tend to learn in one area and apply that knowledge to another, sometimes allowing us to take real leaps beyond our initial capabilities and understanding. I do believe there is a minor issue that needs consideration, and that’s our current ability to have AIs cross-learn from one another. What’s interesting here is that we can train and refine an AI (in the field) to the point where it exceeds expectations; however, when we look under the covers, we really do not understand why it works so well. We certainly have some approaches to cross-train AI models, but these are somewhat manual techniques: knowledge distillation, model ensembling, data augmentation, and transfer learning. Forgive me, but I won’t dig into those methods further; suffice it to say I’m far from a specialist in this area. Still, wouldn’t it be nice for AIs to be able to train other AIs on everything they know about a specific knowledge area? Maybe someone is already working on this problem, but I suppose that would require some standardization.

Hopefully this has given you some food for thought, and I’m certainly interested in what you think about the new possibilities just over the horizon. Exciting stuff! 
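For readers curious about knowledge distillation, one of the cross-training techniques mentioned above, here is a minimal plain-Python sketch of its core idea: a “student” is nudged toward a “teacher’s” temperature-softened output distribution by minimizing the KL divergence between the two, scaled by T² (a common convention that keeps gradient magnitudes comparable across temperatures). To keep it tiny, the student here is just a vector of logits updated directly by gradient descent; in practice the student is a full network trained over many inputs, usually with a deep-learning framework. The function names and numbers are illustrative only.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives softer ones."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                          # subtract max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's soft predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


def distill_step(student_logits, teacher_logits, temperature=2.0, lr=0.5):
    """One gradient-descent step; d(loss)/d(student_logit_i) = T * (q_i - p_i)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return [z - lr * temperature * (qi - pi)
            for z, qi, pi in zip(student_logits, q, p)]


teacher = [2.0, 1.0, 0.1]  # hypothetical teacher outputs for one input
student = [0.0, 0.0, 0.0]  # untrained student starts with no preference

print("loss before:", distillation_loss(student, teacher))
for _ in range(200):
    student = distill_step(student, teacher)
print("loss after: ", distillation_loss(student, teacher))
```

The soft targets carry more than the teacher’s top answer: they also encode how the teacher ranks the alternatives, which is much of what makes distillation useful. It still relies on both models sharing the same output space, a small example of the standardization question raised above.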
Some references, if you are interested:

- A great background on ultrasound modulation: https://www.youtube.com/watch?v=8cSDYx7UoBY
- https://www.dailymail.co.uk/sciencetech/article-13048401/ai-headband-dream-control-long-term-effects.html
- https://www.vice.com/en/article/m7bxdx/scientists-are-researching-a-device-that-can-induce-lucid-dreams-on-demand
- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9124976/
- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5398988/

There are a number of constructs of AI. Some of today’s popular products, such as ChatGPT, Gemini, and Claude, arguably fall within the definition of Artificial Narrow Intelligence (ANI), with the next evolutionary levels being Artificial General Intelligence (AGI) and then Artificial Super Intelligence (ASI).


Author: Patrick MJ Lozon

Archives

April 2024
